OpenAI has introduced GPT-4o, a new version of its generative artificial intelligence model that will power the ChatGPT chatbot. The letter "o" stands for "omni."

According to the developers, GPT-4o responds to voice input in 320 milliseconds on average, which is comparable to human response time in conversation. It also perceives images and audio better than existing models.

GPT-4o will be available to users starting May 13. However, voice features will first be offered only to a "small group of trusted partners" in the coming weeks, and then to paid subscribers in June.

GPT-4o is an updated version of the GPT-4 model with a number of improvements and additional capabilities. Here are some of the key differences:

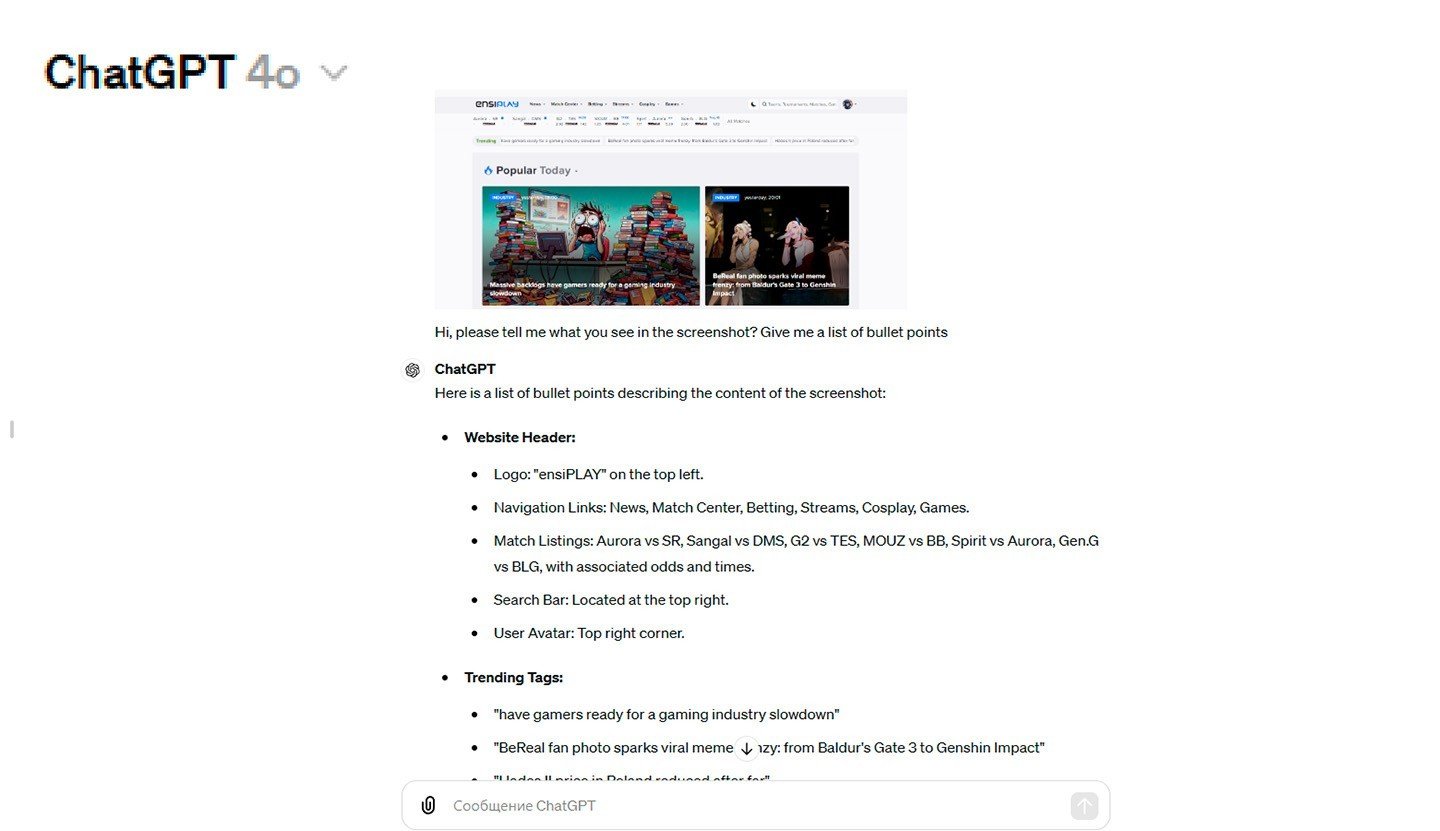

- Perceives and analyzes images, including graphs, charts, and screenshots, much better than existing models.

Image: GPT-4o: working with images, Ensigame

- Deeper understanding of complex queries: the model performs better on tasks that require in-depth analysis and understanding of complex concepts.

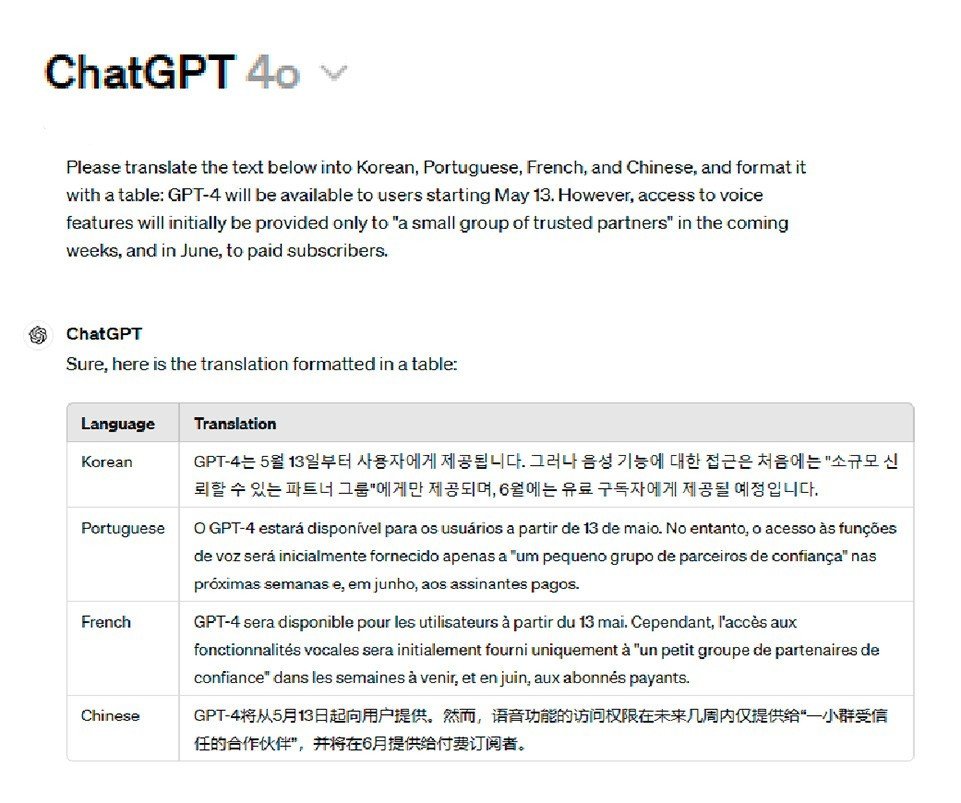

Image: GPT-4o: translation into different language groups, Ensigame

- Enhanced multilingual capability: GPT-4o supports more languages and offers better translation quality and comprehension of texts in different languages.

- Communicates by voice "like a human," varying its intonation.

- Improved memory: the model remembers all conversations with the user.

- Matches GPT-4 Turbo performance on English text and code.

- Uses both its own knowledge and data from the internet.

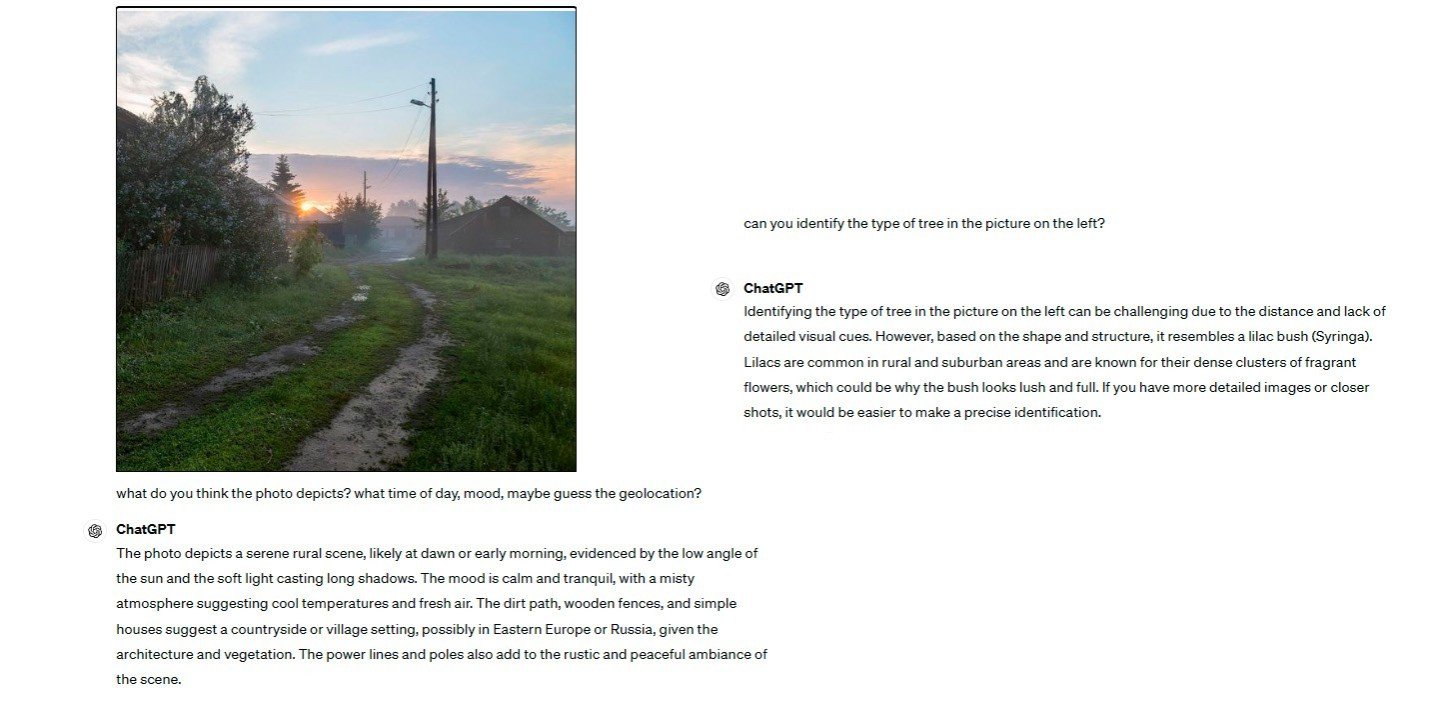

Image: GPT-4o: recognizes objects in low-quality images, Ensigame

The new GPT-4 Omni model will be free for all users. It is also available as a desktop app: you can show it your entire screen and ask it to perform actions, edit code, give advice, and more. In effect, you now have a personal assistant that can always see your screen.

Main image: Ensigame

Daria "Foxdari" Sedelnikova